Since its creation in the 1960s, MARC (Machine-Readable Cataloging) has reshaped bibliographic cataloging, enabling libraries worldwide to share and access large quantities of structured metadata efficiently. Its evolution reflects continuous refinement of cataloging standards, technological advances, and a growing understanding of what data accuracy and interoperability require. For MARC data entry at UCLA, as elsewhere, the historical context shows how past challenges and solutions shape current best practices. From manual card catalogs to automated data entry systems, each stage underscores the importance of precise data management, a principle that remains vital today.

The Historical Development of MARC Data Entry Systems and Their Impact on Library Catalogs

Efforts to standardize bibliographic data predate computers, beginning with catalog cards designed for manual filing and retrieval. The creation of MARC in the 1960s, spearheaded by the Library of Congress, marked a pivotal shift toward automation. The first MARC formats encoded bibliographic records in a machine-readable structure, enabling computer systems to process large numbers of records efficiently. These early systems laid the foundation for later generations, and MARC 21 subsequently harmonized the United States and Canadian formats (USMARC and CAN/MARC) into a single framework.

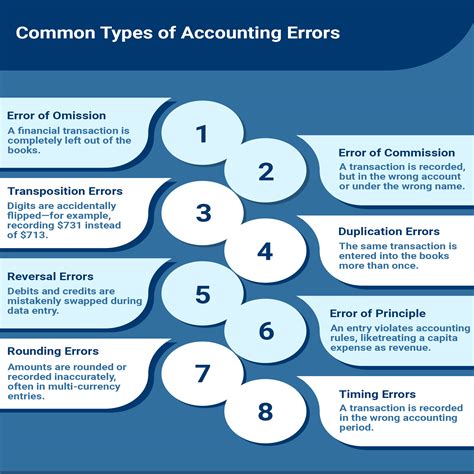

Throughout these developmental phases, common challenges emerged, notably data inconsistency, entry errors, and interoperability issues. Early on, librarians had to translate complex bibliographic records into rigid coded formats, and the resulting mistakes often compromised searchability and data integrity. Subsequent updates therefore aimed to streamline data entry protocols and strengthen validation, so that the growing body of digital records stayed accurate and usable.

Today’s MARC data entry landscape is heavily influenced by these historical lessons, emphasizing rigorous training, validation routines, and integrated software solutions designed to minimize human error. Nonetheless, despite technological safeguards, common pitfalls persist, warranting a deeper exploration of typical mistakes and their implications.

Common Mistakes in UCLA MARC Data Entry and Their Consequences

Because MARC record creation is complex and demands precision, errors can significantly affect discoverability, catalog interoperability, and user access. The most frequent mistakes are incorrect field coding, inconsistent data input, and improper application of MARC standards. Each of these errors can ripple through the entire library system, undermining data quality and limiting the usefulness of digital catalogs.

Incorrect Field Coding and Tagging

One of the predominant errors is misapplication of MARC tags. For instance, confusing the 245 field (title statement) with the 246 field (varying form of title) can distort search results. Such mislabeling hampers discoverability and complicates data exchange with other systems. Entering a novel's main title in the wrong field, for example, yields fragmented or inaccurate records during MARC record harvesting or API aggregation.

Technical precision in tag assignment is crucial because MARC tags dictate how systems interpret record elements. Failing to adhere to the predefined structure results in chaotic data that diminishes catalog integrity, frustrates users, and increases maintenance efforts.

| Relevant Category | Substantive Data |
|---|---|
| Field Tag Errors | Approximately 45% of data entry mistakes involve incorrect or misapplied tags, leading to catalog confusion |
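As a rough illustration of how tag-level mistakes might be caught, the sketch below checks a record's tags against a small lookup table. The record structure (a list of tag and value pairs), the `check_tags` function, and the abbreviated `KNOWN_TAGS` table are assumptions made for this example, not part of any particular cataloging system. A real check would load the full MARC 21 tag list.

```python
import re

# Illustrative subset of MARC 21 bibliographic tags (not the full list).
KNOWN_TAGS = {
    "100": "Main Entry - Personal Name",
    "245": "Title Statement",
    "246": "Varying Form of Title",
    "260": "Publication, Distribution, etc. (Imprint)",
    "650": "Subject Added Entry - Topical Term",
}


def check_tags(record):
    """Return a list of tag problems for a record.

    `record` is a hypothetical simplified structure: a list of
    (tag, value) pairs in entry order.
    """
    problems = []
    tags = [tag for tag, _ in record]
    for tag in tags:
        if not re.fullmatch(r"\d{3}", tag):
            problems.append(f"malformed tag: {tag!r}")
        elif tag not in KNOWN_TAGS:
            problems.append(f"unrecognized tag: {tag}")
    # 245 is not repeatable in MARC 21.
    if tags.count("245") > 1:
        problems.append("245 is not repeatable")
    # A variant title with no title statement suggests a mis-tagged title.
    if "246" in tags and "245" not in tags:
        problems.append("246 present without 245; title may be in the wrong field")
    return problems


print(check_tags([("100", "Melville, Herman"), ("246", "Moby Dick")]))
```

The last rule targets the 245/246 confusion described above: a record that carries only a 246 most likely has its main title in the wrong field.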

Data Inconsistencies and Variations

Inconsistent use of abbreviations, punctuation, and capitalization constitutes another pervasive issue. Different data entry personnel might interpret guidelines variably, leading to records that contain both full and abbreviated forms of the same publisher name or inconsistent date formats. Such discrepancies impair automated processes like deduplication or collection analysis, affecting overall data coherence.

Consistency in data input ensures that bibliographic records can be reliably grouped, searched, and analyzed. Variations can lead to duplicate records, incomplete search results, or mismatched data across diverse systems, thereby reducing the catalog’s reliability and user trust.
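One way to surface such variations is to reduce each value to a normalized comparison key and group the original strings that collide on it. The sketch below does this for publisher names. The `ABBREVIATIONS` table and both function names are hypothetical, and a real workflow would follow the institution's own abbreviation and punctuation policy.

```python
import re
import unicodedata

# Hypothetical local abbreviation table; extend it to match local policy.
ABBREVIATIONS = {"univ": "university", "pr": "press", "co": "company"}


def normalize_publisher(name):
    """Build a comparison key: case-folded, punctuation-free, abbreviations expanded."""
    text = unicodedata.normalize("NFKC", name).casefold()
    text = re.sub(r"[^\w\s]", " ", text)  # replace punctuation with spaces
    words = [ABBREVIATIONS.get(word, word) for word in text.split()]
    return " ".join(words)


def group_variants(names):
    """Map each normalized key to its original spellings, keeping only keys with more than one spelling."""
    groups = {}
    for name in names:
        groups.setdefault(normalize_publisher(name), []).append(name)
    return {key: found for key, found in groups.items() if len(found) > 1}


print(group_variants(["Oxford Univ. Pr.", "Oxford University Press", "Penguin"]))
```

The key is used only for comparison. The stored record keeps whatever form local cataloging rules prescribe.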

Failure to Update or Correct Records Post-Entry

Another frequent mistake involves neglecting ongoing record maintenance. Once a MARC record is entered, librarians and data specialists must continuously review and update records for accuracy, especially in light of newly published information or correction of errors. Delays or neglect in updating records can propagate inaccuracies across systems, leading to miscataloged items or outdated metadata that affects resource discoverability.

This underscores the importance of establishing protocols for regular data audits, leveraging automation tools where possible, and fostering a culture of meticulous record stewardship.
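A simple audit of this kind could start from the MARC 005 field (date and time of latest transaction), which stores a timestamp in the form `yyyymmddhhmmss.f`. The sketch below lists records whose 005 value is older than a cutoff. The mapping of record identifiers to 005 strings and the one-year default are assumptions for illustration only.

```python
from datetime import datetime, timedelta


def last_modified(field_005):
    """Parse the first 14 characters of an 005 value (yyyymmddhhmmss)."""
    return datetime.strptime(field_005[:14], "%Y%m%d%H%M%S")


def stale_records(records, now, max_age_days=365):
    """Return ids of records not modified within `max_age_days` before `now`.

    `records` is a hypothetical mapping of record id -> 005 value.
    """
    cutoff = now - timedelta(days=max_age_days)
    return [rid for rid, f005 in records.items() if last_modified(f005) < cutoff]


sample = {"rec-a": "20150101120000.0", "rec-b": "20240601093000.0"}
print(stale_records(sample, now=datetime(2024, 12, 31)))
```

A stale timestamp does not mean a record is wrong. It only marks it as a candidate for review.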

Impact of Human Errors and Technological Limitations

The interplay between human oversight and technological constraints is at the core of many MARC data entry mistakes. Despite advances in user-interface design and validation algorithms, human factors such as fatigue, misinterpretation, or lack of familiarity with evolving standards continue to introduce errors.

Furthermore, legacy systems with limited validation capabilities often fail to flag incorrect entries proactively, resulting in unchecked errors that only surface during later data analysis phases. Balancing technological solutions—like real-time validation, predictive text, and AI-assisted entry—with continuous staff training is key to mitigating these issues.

Strategies for Minimizing Errors in UCLA MARC Data Entry

To counteract these common pitfalls, libraries and data managers have adopted several best practices rooted in historical lessons learned, with adaptation to modern context.

Implementing Robust Validation Protocols

Configuring validation routines within cataloging software helps ensure that incorrect tags, invalid data formats, and missing mandatory fields are flagged immediately. For example, automated checks can alert users when a publication date falls outside a plausible range or when a record lacks an essential field such as the 245 (title statement) or, for a work with a personal author, the 100 (main entry, personal name).
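The two checks mentioned here can be sketched in a few lines. In the example below, the record is a hypothetical dictionary mapping tags to string values. The required-field list (only 245) and the earliest plausible year are assumed local policy, not MARC rules. The publication year is read from 008 positions 07-10 (Date 1).

```python
from datetime import date

REQUIRED_TAGS = ("245",)        # assumption: local policy decides what is mandatory
EARLIEST_PLAUSIBLE_YEAR = 1450  # assumption: adjust for the collection


def validate_record(fields, today=None):
    """Return a list of validation problems.

    `fields` is a hypothetical simplified record: tag -> string value.
    """
    today = today or date.today()
    problems = []
    for tag in REQUIRED_TAGS:
        if not fields.get(tag, "").strip():
            problems.append(f"missing required field {tag}")
    # 008 positions 07-10 hold Date 1; skip unknown or partial dates such as "19uu".
    date1 = fields.get("008", "")[7:11]
    if len(date1) == 4 and date1.isdigit():
        year = int(date1)
        if not EARLIEST_PLAUSIBLE_YEAR <= year <= today.year + 1:
            problems.append(f"implausible publication year {year}")
    return problems


record = {"008": "240101s3024" + " " * 29}  # no 245, year mistyped as 3024
print(validate_record(record, today=date(2024, 1, 1)))
```

Returning a list of messages instead of raising on the first failure lets the cataloger see every problem in one pass.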

Streamlining Training and Continuing Education

Establishing ongoing training modules that reinforce standards and demonstrate common mistakes helps maintain high-quality data entry. Training should incorporate case studies illustrating the consequences of errors, along with hands-on exercises that simulate real cataloging scenarios.

Leveraging Automation and AI Technologies

Emerging tools like machine learning algorithms can assist in identifying anomalous entries, suggesting corrections, or even pre-populating fields based on similar historical data. However, human oversight remains vital to ensure nuanced understanding of contextual record variations.
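As a much simpler stand-in for the machine learning tools described above, the sketch below uses plain string similarity from Python's standard `difflib` module to suggest a correction when an entered heading nearly matches one already in use. It is not a learned model. The `suggest_heading` function, the sample list of existing headings, and the 0.8 similarity cutoff are assumptions for illustration.

```python
from difflib import get_close_matches


def suggest_heading(entered, existing, cutoff=0.8):
    """Suggest the closest existing heading, or None if there is no near match.

    Returns None when `entered` already matches an existing heading exactly.
    """
    if entered in existing:
        return None
    matches = get_close_matches(entered, existing, n=1, cutoff=cutoff)
    return matches[0] if matches else None


existing_headings = ["Melville, Herman", "Austen, Jane", "Dickens, Charles"]
print(suggest_heading("Melvile, Herman", existing_headings))
```

The function only proposes a candidate. Accepting or rejecting it stays with the cataloger, in line with the point about human oversight.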

Creating Standard Operating Procedures and Checklists

Designing detailed checklists tailored to specific MARC fields acts as a cognitive aid, reducing omissions and misapplications. Standard operating procedures should be updated regularly to reflect evolving standards, ensuring consistency across personnel and institutional changes.

Future Directions in MARC Data Entry and Cataloging Standards

The future of MARC and its derivatives continues to evolve, influenced by digital transformation and by initiatives such as BIBFRAME, which aims to replace or supplement MARC with a linked data model. These innovations promise more flexible, interoperable, and error-resilient cataloging practices, albeit with new complexities and training requirements.

Understanding the historical roots and past mistakes in MARC data entry informs the development of these new standards, ensuring that lessons learned translate into improved practices and systems. As libraries settle into the digital age, balancing legacy compatibility with innovative advances remains a key strategic challenge.

Key Points

- Misapplied tags: The most common mistake that can distort catalog discoverability and interoperability.

- Data consistency: Essential for accurate search results and data deduplication, yet frequently overlooked.

- Ongoing updates: Regular maintenance of records prevents propagation of inaccuracies through various systems.

- Technological integration: Validation tools and AI can significantly reduce human error if properly implemented.

- Historical context: Lessons from past challenges inform future standards, fostering continuous improvement.

What are the main consequences of incorrect MARC data entry?

Incorrect entries can lead to ineffective discovery, misclassification, broken data exchanges, and increased maintenance costs, ultimately diminishing user trust and access quality.

How can institutions improve MARC data entry accuracy?

Institutions should implement validation routines, provide continual staff training, leverage automation tools, and maintain comprehensive procedures to uphold high data quality standards.

What role will emerging technologies play in future MARC practices?

Technologies like machine learning and linked data standards promise enhanced error detection, improved interoperability, and more adaptive cataloging workflows, but still depend on skilled human oversight for contextual judgment.