Consider a seasoned pharmacologist in a cluttered lab, comparing centuries-old handwritten dosing records with a modern digital dosage calculator. The evolution of dosage calculation reflects not merely technological advancement but an ongoing pursuit of precision, safety, and efficacy in medication administration. Tracing this history shows that each innovation, whether manual, analog, or digital, was a response to the demands of therapeutics, patient safety, and clinical outcomes. This narrative follows the path from estimation methods rooted in empirical observation to today's sophisticated algorithms, including those powered by artificial intelligence, highlighting key milestones and the professional principles that guided the change.

Foundations of Dosage Calculation: From Empirical Origins to Early Quantitative Methods

The origins of dosage calculation lie in ancient civilizations, where herbal remedies and rudimentary doses were guided by tradition and experiential knowledge. The earliest documented efforts to put dosing on a more systematic footing appear in texts from ancient Egypt and Greece, which emphasized empirical observation over precise measurement. Hippocrates and Galen, for example, relied on qualitative assessment, trial and error, and anecdotal evidence rather than quantitative precision. This approach persisted well into the medieval period, when apothecaries relied on bulk measurements or rough approximations based on body size or age.

The Renaissance and the scientific revolution of the 16th and 17th centuries marked a turning point, as more systematic scientific inquiry prompted a gradual shift toward quantitative methods. Paracelsus (1493–1541), remembered for the principle that the dose determines whether a substance heals or poisons, advocated specific, measured doses of his preparations, although within a very limited scope. The need for standardized dosing nevertheless remained largely unmet until pharmacology emerged as a formal discipline in the 19th century, accompanied by national pharmacopoeias and other standardization efforts.

The Birth of Systematic Dosage Calculation in the 19th and Early 20th Centuries

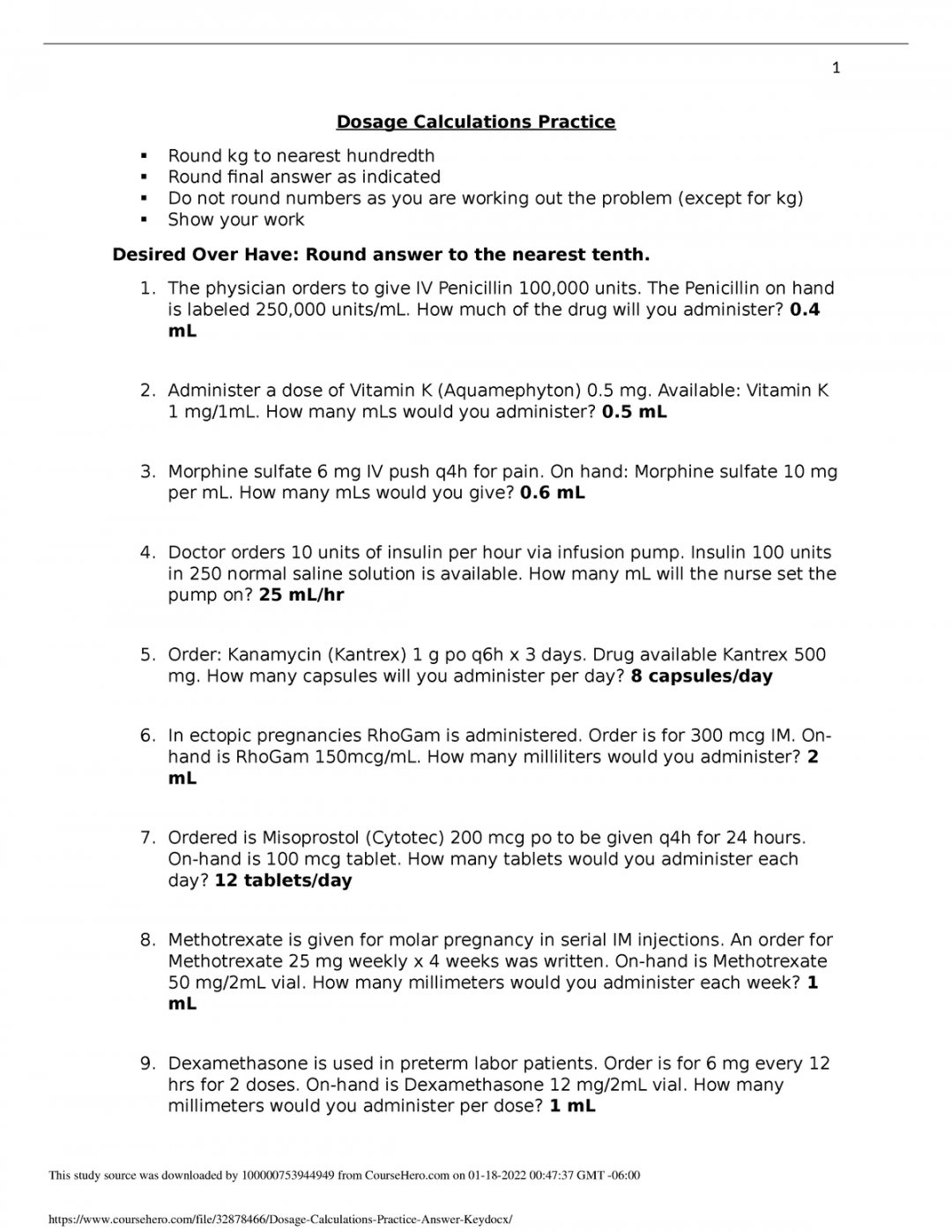

The 19th century brought growing recognition that precise dosing was essential to optimize therapeutic outcomes while minimizing toxicity. Practitioners used ratio-based methods keyed to body weight, age, and severity of illness. Advances in chemical analysis, which made it possible to isolate and measure active compounds, together with a growing appreciation of the narrow margin between effective and toxic doses, encouraged more accurate calculation. Routine practice nonetheless still relied on estimation, with clinicians manually deriving doses from tables or from formulas built on observational data.

A representative example is Clark’s Rule, introduced in the late 19th century, which estimates a pediatric dose by scaling the adult dose by the child’s weight (or, in a variant, body surface area) relative to that of an adult. Innovative for its time, Clark’s Rule and similar methods could not account for individual variability, comorbidities, or pharmacokinetic differences between children and adults.

| Aspect | Detail |
|---|---|
| Sample calculation method | Clark’s Rule: pediatric dose = (weight in pounds / 150) × adult dose |
| Limitations | Assumes proportional pharmacokinetics across age groups, ignoring metabolic differences |
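Clark’s Rule as given in the table reduces to a one-line scaling. The sketch below encodes it directly; the function names are illustrative, not from any published tool, and a kilogram-to-pound helper is included because modern weights are usually recorded in kilograms.

```python
def clarks_rule_dose(adult_dose_mg: float, child_weight_lb: float) -> float:
    """Estimate a pediatric dose with Clark's Rule.

    pediatric dose = (weight in pounds / 150) * adult dose,
    where 150 lb is the rule's reference adult weight.
    """
    if adult_dose_mg <= 0 or child_weight_lb <= 0:
        raise ValueError("dose and weight must be positive")
    return (child_weight_lb / 150.0) * adult_dose_mg


def lb_from_kg(weight_kg: float) -> float:
    """Convert kilograms to pounds (1 lb is defined as exactly 0.45359237 kg)."""
    return weight_kg / 0.45359237


# Adult dose 500 mg, child weighs 30 lb: (30 / 150) * 500 = 100 mg
print(clarks_rule_dose(500, 30))
```

The rule's limitation is visible in the code itself: weight is the only patient variable, so age-related differences in metabolism and clearance never enter the calculation.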

Transition to Computational Aids: Mechanical and Electronic Calculators

The mid-20th century ushered in mechanical and, later, electronic calculators, significantly transforming dosage determination. Slide rules and printed nomograms gave way to electronic devices, first desktop machines and then, from the 1970s, handheld calculators, enabling rapid calculations that reduced human error. These tools brought basic functions such as drug dilution calculations and body surface area estimation to clinicians at the bedside.

These devices, though limited in scope, improved accuracy in complex calculations, especially in pediatric and critical care settings, reducing calculation mistakes and increasing throughput in busy hospitals. They also proved instrumental in extending systematic dosage calculation into complex domains that had previously depended solely on manual methods.
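Both bedside calculations mentioned above reduce to short formulas. The sketch below assumes the Mosteller formula for body surface area, chosen here for simplicity as one of several published BSA equations, and the standard dilution relation C1 × V1 = C2 × V2. Function names are illustrative.

```python
import math


def bsa_mosteller(height_cm: float, weight_kg: float) -> float:
    """Body surface area in m^2 by the Mosteller formula:
    BSA = sqrt(height_cm * weight_kg / 3600)."""
    if height_cm <= 0 or weight_kg <= 0:
        raise ValueError("height and weight must be positive")
    return math.sqrt(height_cm * weight_kg / 3600.0)


def dilution_volume(stock_conc: float, target_conc: float, final_volume: float) -> float:
    """Volume of stock needed to make `final_volume` at `target_conc`,
    from C1 * V1 = C2 * V2 (both concentrations in the same units)."""
    if target_conc > stock_conc:
        raise ValueError("target concentration cannot exceed stock concentration")
    return target_conc * final_volume / stock_conc


# 180 cm, 80 kg: sqrt(180 * 80 / 3600) = sqrt(4) = 2.0 m^2
print(bsa_mosteller(180, 80))
# 100 mL at 2 mg/mL from a 10 mg/mL stock: 20 mL of stock, made up to 100 mL
print(dilution_volume(10, 2, 100))
```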

Digital Revolution: Software and Algorithmic Calculations

The arrival of personal computers in the late 20th century transformed dosage calculation. Early software, often custom-built by clinical pharmacologists or hospital IT teams, encoded formulas, tables, and algorithms and returned results instantly. These programs began to incorporate pharmacokinetic models, absorption rates, and drug interactions, moving from simple scaling methods toward predictive, individualized dosing regimens.
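To make "pharmacokinetic model" concrete, here is a minimal sketch of the simplest such model, assuming linear, one-compartment kinetics with first-order elimination and intravenous dosing. Clearance (CL) and volume of distribution (Vd) drive everything: the loading dose is Vd × target concentration and the maintenance infusion rate is CL × target steady-state concentration. The class name and parameter values are hypothetical, not clinical recommendations.

```python
import math
from dataclasses import dataclass


@dataclass
class OneCompartmentModel:
    """Linear one-compartment model with first-order elimination (hypothetical)."""
    clearance_l_per_h: float  # CL, L/h
    volume_l: float           # Vd, L

    @property
    def k_el(self) -> float:
        """Elimination rate constant k = CL / Vd, in 1/h."""
        return self.clearance_l_per_h / self.volume_l

    @property
    def half_life_h(self) -> float:
        """Elimination half-life t1/2 = ln(2) / k, in hours."""
        return math.log(2) / self.k_el

    def loading_dose_mg(self, target_conc_mg_per_l: float) -> float:
        """Dose that reaches the target concentration at once: Vd * C."""
        return self.volume_l * target_conc_mg_per_l

    def maintenance_rate_mg_per_h(self, target_css_mg_per_l: float) -> float:
        """Infusion rate that sustains the target steady state: CL * Css."""
        return self.clearance_l_per_h * target_css_mg_per_l

    def conc_after_bolus(self, dose_mg: float, t_h: float) -> float:
        """Concentration t hours after an IV bolus: (Dose / Vd) * exp(-k * t)."""
        return dose_mg / self.volume_l * math.exp(-self.k_el * t_h)


# Hypothetical patient: CL = 5 L/h, Vd = 50 L, target concentration 10 mg/L
model = OneCompartmentModel(clearance_l_per_h=5.0, volume_l=50.0)
print(model.loading_dose_mg(10.0))            # 500.0 (mg)
print(model.maintenance_rate_mg_per_h(10.0))  # 50.0 (mg/h)
print(round(model.half_life_h, 2))            # 6.93 (h)
```

Individualization comes from estimating CL and Vd for the specific patient, for example from renal function or measured drug levels, rather than from a population average.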

Electronic health records (EHRs) later integrated dosage calculators directly into patient-management workflows, embedding clinical decision support systems (CDSS) that alert pharmacists and physicians to potential dosing errors or contraindications. Renal dosing calculators built on the Cockcroft-Gault equation, for example, illustrate how adjusting doses for impaired kidney function became part of routine practice.
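The Cockcroft-Gault equation estimates creatinine clearance (CrCl, mL/min) as (140 − age) × weight (kg) / (72 × serum creatinine in mg/dL), multiplied by 0.85 for women. A renal dosing calculator then maps CrCl to a dose adjustment. The sketch below shows that two-step pattern; the adjustment bands are entirely hypothetical, since real cut-offs are drug-specific and come from product labeling.

```python
def cockcroft_gault_crcl(age_years: float, weight_kg: float,
                         serum_creatinine_mg_dl: float, female: bool) -> float:
    """Estimate creatinine clearance (mL/min) with the Cockcroft-Gault equation:
    CrCl = (140 - age) * weight / (72 * SCr), times 0.85 for females."""
    if weight_kg <= 0 or serum_creatinine_mg_dl <= 0:
        raise ValueError("weight and serum creatinine must be positive")
    crcl = (140 - age_years) * weight_kg / (72 * serum_creatinine_mg_dl)
    return crcl * 0.85 if female else crcl


# HYPOTHETICAL bands for an unnamed drug: (minimum CrCl in mL/min, fraction of usual dose),
# ordered from the highest threshold down. Not real clinical cut-offs.
HYPOTHETICAL_BANDS = ((50.0, 1.0), (30.0, 0.5), (0.0, 0.25))


def renally_adjusted_dose(usual_dose_mg: float, crcl_ml_min: float,
                          bands=HYPOTHETICAL_BANDS) -> float:
    """Scale the usual dose by the first band whose threshold the CrCl meets."""
    for min_crcl, fraction in bands:
        if crcl_ml_min >= min_crcl:
            return usual_dose_mg * fraction
    raise ValueError("CrCl below all defined bands")


# 60-year-old man, 72 kg, SCr 1.0 mg/dL: (80 * 72) / 72 = 80 mL/min, so the full dose applies
crcl = cockcroft_gault_crcl(60, 72, 1.0, female=False)
print(crcl, renally_adjusted_dose(1000, crcl))
```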

| Technique | Impact |
|---|---|
| Pharmacokinetic modeling | Enhanced individualized dosing, accounting for clearance and volume of distribution |
| Clinical decision support systems | Reduced medication errors by providing real-time alerts and recommendations |
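A common CDSS building block is a dose-range check that fires an alert when an order falls outside configured limits. The sketch below is a minimal, hypothetical version: the drug name, limits, and message wording are invented for illustration, and production systems draw their rules from curated drug knowledge bases. It also rejects implausible inputs, since input errors are a known failure mode for automated calculators.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DoseLimit:
    """Hypothetical per-dose limits a CDSS rule might check."""
    drug: str
    min_mg_per_kg: float
    max_mg_per_kg: float
    absolute_max_mg: float


def check_dose(order_mg: float, weight_kg: float, limit: DoseLimit) -> List[str]:
    """Return alert messages for an order; an empty list means no alert fires."""
    if order_mg <= 0 or weight_kg <= 0:
        raise ValueError("order and weight must be positive")  # guard against bad input
    alerts = []
    per_kg = order_mg / weight_kg
    if per_kg < limit.min_mg_per_kg:
        alerts.append(f"{limit.drug}: {per_kg:.1f} mg/kg is below the usual range")
    if per_kg > limit.max_mg_per_kg:
        alerts.append(f"{limit.drug}: {per_kg:.1f} mg/kg exceeds the usual range")
    if order_mg > limit.absolute_max_mg:
        alerts.append(f"{limit.drug}: {order_mg:.0f} mg exceeds the absolute maximum")
    return alerts


# Hypothetical "drug_x": 10-15 mg/kg per dose, never more than 1000 mg per dose
rule = DoseLimit("drug_x", 10.0, 15.0, 1000.0)
print(check_dose(150, 10, rule))   # 15 mg/kg, within range: no alerts
print(check_dose(1500, 80, rule))  # too high per kg and above the absolute cap
```

Returning a list rather than a single flag lets the interface show every rule an order violates, which supports the clinician review the surrounding text calls for.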

Current State: Artificial Intelligence and Machine Learning in Dosage Optimization

In recent years, artificial intelligence (AI) and machine learning (ML) techniques have begun to push dosage calculation into new territory. These models draw on large datasets, spanning pharmacogenomic information and real-time clinical parameters, to tailor therapy to the individual. AI algorithms can, for instance, analyze patient-specific variables such as age, weight, renal function, genetic polymorphisms, and medication history to generate personalized dosage recommendations.

An illustrative case is a biotech startup collaborating with oncology clinics to optimize chemotherapy dosing, with the aim of reducing adverse effects and improving outcomes. Its AI platform integrates pharmacogenomic data, pharmacokinetic simulation, and adaptive learning to refine dosage recommendations continually, building on centuries of incremental innovation.
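The platform described above is not specified in enough detail to reproduce, and the sketch below is not ML. It illustrates only the feedback idea behind "continually refine": the classic proportional rule from therapeutic drug monitoring, which assumes linear pharmacokinetics so that steady-state concentration scales with dose (new dose = current dose × target / measured). The step cap, the simulated clearance, and all names are hypothetical.

```python
def proportional_dose_update(current_dose: float, measured_css: float,
                             target_css: float, max_step: float = 0.5) -> float:
    """Linear-kinetics update: new = current * target / measured, with the
    relative change clamped to +/- max_step (a hypothetical safety cap)."""
    if current_dose <= 0 or measured_css <= 0 or target_css <= 0:
        raise ValueError("dose and concentrations must be positive")
    ratio = target_css / measured_css
    ratio = min(max(ratio, 1.0 - max_step), 1.0 + max_step)
    return current_dose * ratio


# Simulated patient with linear kinetics: Css = dosing rate / CL (CL hypothetical, 4 L/h)
clearance = 4.0
dose_rate = 20.0  # mg/h, initial guess
target = 10.0     # mg/L
for step in range(4):
    css = dose_rate / clearance  # "measured" steady-state level for this rate
    print(f"step {step}: rate {dose_rate:.1f} mg/h -> Css {css:.2f} mg/L")
    dose_rate = proportional_dose_update(dose_rate, css, target)
```

Because of the cap, the rate rises from 20 to 30 and then settles at 40 mg/h, where the simulated level matches the 10 mg/L target. Real adaptive systems add models of nonlinear kinetics, assay error, and uncertainty that this sketch omits.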

Implications and Challenges of Modern Dosage Calculation Practices

While technological advances offer unprecedented precision, they also introduce challenges related to data quality, algorithm transparency, and clinical integration. Errors in input data, lack of standardized validation, and potential biases embedded in ML models pose risks to patient safety. Moreover, the shift from manual to automated systems necessitates ongoing training for clinicians to interpret algorithmic recommendations critically, ensuring they complement clinical judgment rather than replace it.

Regulatory oversight now also extends to software, requiring testing and validation protocols comparable to pharmaceutical standards. The European Union's Medical Device Regulation (MDR) and the FDA's guidance on clinical decision support software exemplify evolving frameworks aimed at safeguarding safety and effectiveness.

Key Points

- Evolution from empirical to algorithm-driven dosing reflects technological progress and a focus on personalized medicine.

- Early manual methods laid a foundation but ignored individual variability, a limitation now being addressed by pharmacogenomics and AI.

- Technological innovations can substantially reduce medication errors but require vigilant validation and clinician oversight.

- Integration of AI in dosage practices exemplifies the convergence of big data, machine learning, and clinical pharmacology.

- The future of dosing practices hinges on balancing innovation with safety, transparency, and clinician training.

What were the earliest methods used for drug dosage calculation?

Initially, doses were estimated from empirical observation, body weight, age, and traditional practice, with little scientific underpinning. Ancient civilizations relied on qualitative judgment and rough measurement; practice evolved gradually into standardized tables and rules such as Clark’s Rule in the late 19th century.

How did technological innovations influence dosage calculation practices?

From mechanical calculators in the mid-20th century to sophisticated software with pharmacokinetic modeling today, technology has enhanced accuracy, speed, and individualized dosing. Electronic health records and decision support systems now embed complex algorithms integrating patient data, reducing errors and optimizing therapy.

What are current challenges facing modern dosage calculation systems?

Key challenges include ensuring data quality, algorithm transparency, clinician acceptance, regulatory compliance, and mitigating biases. While AI offers personalized solutions, validation, interpretability, and integration into clinical workflows remain critical concerns.